Quite recently, Mint reported that the intelligence agencies of three nations – India, the UK and the US – did not pull together all the strands gathered by their high-tech surveillance tools, which might have allowed them to disrupt the 2008 terror strike in Mumbai. Would data mining of the trove of raw information, along the lines of the US National Security Agency’s PRISM electronic surveillance program, have helped connect the dots so that the bigger picture could emerge? According to documents leaked by Edward Snowden, the NSA has been operating PRISM since 2007 to look for patterns across a wide range of data sources, spanning multiple gateways. PRISM’s Big Data framework aggregates structured and unstructured data, including phone call records, voice and video chat sessions, photographs, emails, documents, financial transactions, internet searches, social media exchanges and smartphone logs, to gain real-time insights that could help avert security threats.

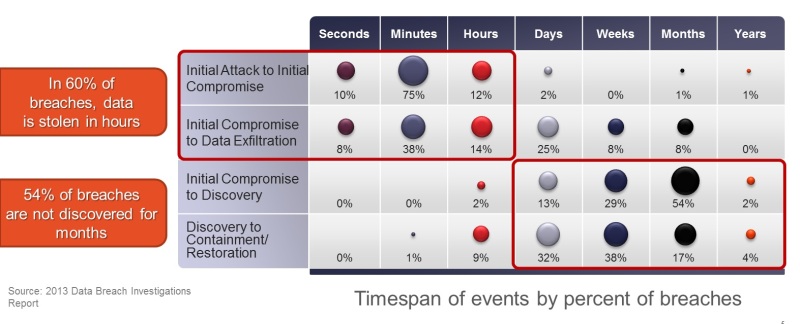

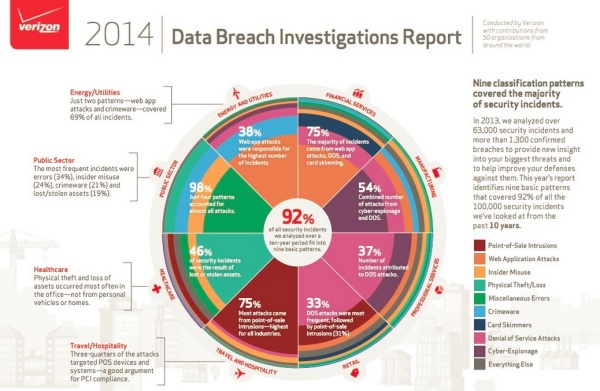

In the business world, a relatively recent Verizon Data Breach Investigations Report points out that data is stolen within hours in 60% of breaches, but that the theft goes undetected for months in 54% of all breaches. Attacks are mounting in pace, and the IT attack surface keeps growing with the constant influx of new technologies such as cloud computing, BYOD and virtualization. CISOs are therefore looking to real-time data analytics to improve threat defense through better controls, to reliably detect an incident and quickly contain the breach before it inflicts an inordinate amount of damage, and to provide insight into the extent of data exfiltration so that damages can be quantified and the situation potentially remediated, all without aggravating the security staff shortages already faced by most organizations.

Figure – Timespan of Breach Detection (Source: Verizon)

The era of big data security analytics is already upon us, with large organizations collecting and processing terabytes of internal and external security information. Internet businesses such as Amazon, Google, Netflix and Facebook, which have been experimenting with analytics since the early 2000s, might contend that Big Data is just an old technology in a new disguise. In the past, the ability to acquire and deploy high-performance computing systems was limited to large organizations with dire scaling needs. However, technological advances in the last decade have rapidly decreased the cost of compute and storage, increased the flexibility and cost-effectiveness of data centers and cloud computing for elastic computation and storage, and produced new Big Data frameworks that let users take advantage of distributed systems storing large quantities of data through flexible parallel processing. Together with major catalysts such as data growth and longer retention needs, these advances have made it both attractive and imperative for many different types of organizations to invest in Big Data Analytics.

How do traditional Security Analysis tools fare?

Over the decades, security vendors have progressively innovated on multiple fronts to (1) protect endpoints, networks, data centers, databases, content and other assets, (2) provide risk and vulnerability management that meets governance requirements, (3) ensure policy compliance with SOX, PCI DSS, HIPAA, GLBA and other regulations, and (4) offer identity/access/device management, while aiming (5) to provide a unified security management solution that allows for configuration of their security products and offers visibility into enterprise security through its reporting capability. While most individual security technologies have matured to the point of commoditization, security analysis remains a clumsy affair that requires many different tools (even in SIEM deployments, which I will elaborate on below), most of which do not interoperate because of the piecemeal approach to security innovation and multi-vendor security deployments.

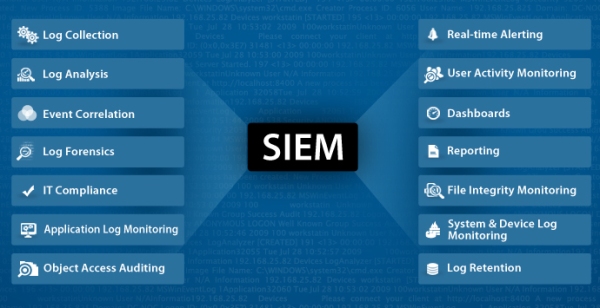

Today, security analysts often rely on tools such as Log Management solutions and Security Information and Event Management (SIEM) systems for network security event monitoring, user monitoring and compliance reporting – both of which focus primarily on the collection, aggregation and analysis of real-time logs from network devices, operating systems and applications. These tools allow log data to be parsed and searched for trends, anomalies and other information relevant to forensics, though SIEMs are deemed more effective for forensics given their event reduction capability.

Prominent Log Management solutions include AlienVault USM, AlertLogic Log Manager, McAfee Enterprise Log Manager, LogRhythm, HP ArcSight ESM, Splunk, SolarWinds Log & Event Manager, Tenable Log Correlation Engine, ElasticSearch ELK, SawMill, Assuria Log Manager, BlackStratus Log Storm and EiQNetworks SecureVue. You’ll find a more complete list along with details on these offerings here.

Let me now elaborate on how Log Management and SIEM solutions differ, and point out a few shortcomings of SIEM solutions (apart from their price, of course).

While a SIEM solution is a specialized tool for information security, it is certainly not a subset of Log Management. Beyond log management, SIEMs use correlation for real-time analysis through event reduction, prioritization and real-time alerting, and provide specific workflows to address security breaches as they occur. Another key feature of a SIEM is the incorporation of non-event-based data, such as vulnerability scanning reports, into correlation analysis. Let me unpack these features unique to SIEMs.

- Quite plainly, correlation means looking for common attributes and linking events together into meaningful categories. Correlating real-time and historical events allows meaningful security events to be identified amid massive amounts of raw event data, using context information about users, assets, threats and vulnerabilities. Multiple forms of event correlation are available: e.g. a known threat described by correlation rules, abnormal behavior flagged as a deviation from a baseline, statistical anomalies, and advanced correlation algorithms such as case-based reasoning, graph-based reasoning and cluster analysis for predictive analytics. In the simplest case, SIEM rules take the form of rule-based reasoning (RBR) and contain a set of conditions, triggers, counters and an action script. The effectiveness of SIEM systems can vary widely depending on the correlation methods they support. With SIEMs, unlike with IDSs, it is possible to specify a general description of symptoms and use baseline statistics to monitor deviations from the usual behavior of systems and traffic.

- Prioritization involves highlighting important security events over less critical ones based on correlation rules, or through inputs from vulnerability scanning reports which identify assets with known vulnerabilities given their software version and configuration parameters. With information about any vulnerability and asset severity, SIEMs can identify the vulnerability that has been exploited in case of abnormal behavior, and prioritize incidents in accordance with their severity, to reduce false positives.

- Alerting involves automated analysis of correlated events and production of alerts, based on configured event thresholds for incident management. This is usually seen as the primary task of a SIEM solution that differentiates it from a plain Log Management solution.
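The rule-based reasoning described in the bullets above (a condition, a counter, a trigger threshold and an action) can be sketched roughly as follows. This is a minimal illustration and not how any particular SIEM implements correlation; the `CorrelationRule` class, the event fields (`type`, `src_ip`, `ts`) and the threshold values are all hypothetical.

```python
from collections import defaultdict, deque


class CorrelationRule:
    """Hypothetical rule: fire when `threshold` matching events from the
    same source arrive within `window` seconds."""

    def __init__(self, name, condition, threshold, window, action):
        self.name = name
        self.condition = condition        # predicate over a single event
        self.threshold = threshold        # counter value that triggers the rule
        self.window = window              # sliding window, in seconds
        self.action = action              # callback run when the rule fires
        self._hits = defaultdict(deque)   # per-source timestamps of matches

    def feed(self, event):
        """Process one event; return True if the rule fired on it."""
        if not self.condition(event):
            return False
        hits = self._hits[event["src_ip"]]
        hits.append(event["ts"])
        # Drop matches that have fallen out of the sliding window.
        while hits and event["ts"] - hits[0] > self.window:
            hits.popleft()
        if len(hits) >= self.threshold:
            self.action(self.name, event["src_ip"], len(hits))
            hits.clear()  # reset the counter so the same burst alerts once
            return True
        return False


alerts = []
rule = CorrelationRule(
    name="possible-brute-force",
    condition=lambda e: e["type"] == "login_failed",
    threshold=3,
    window=60,
    action=lambda name, src, count: alerts.append((name, src, count)),
)

events = [
    {"type": "login_failed", "src_ip": "10.0.0.5", "ts": 0},
    {"type": "login_ok", "src_ip": "10.0.0.9", "ts": 5},
    {"type": "login_failed", "src_ip": "10.0.0.5", "ts": 20},
    {"type": "login_failed", "src_ip": "10.0.0.5", "ts": 45},
]
fired = [rule.feed(e) for e in events]
```

Here the rule fires only on the third failed login from the same source inside the 60-second window. Note that the intelligence lives entirely in the hand-written condition and threshold, which is the maintenance burden this post comes back to when discussing SIEM challenges.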

The figure below captures the complete list of SIEM capabilities.

Figure – SIEM Capabilities (Source: ManageEngine.com)

SIEM solutions available in the market include IBM Security’s QRadar, HP ArcSight, McAfee ESM, Splunk Enterprise, EMC RSA Security Analytics, NetIQ Sentinel, AlienVault USM, SolarWinds LEM, Tenable Network Security and the open source SIM that goes by the name of OSSIM. Here is probably the best source on the SIEM solutions out in the market, and how they rank.

Real-world challenges with SIEMs

- SIEMs provide the framework for analyzing data, but they do not provide the intelligence to analyze that data sensibly and detect or prevent threats in real-time. The intelligence has to be fed to the system by human operators in the form of correlation rules.

- The correlation rules look for a sequence of events based on static rule definitions. There is no dynamic rule generation based on current conditions, so it takes immense effort to make these rules useful and keep them that way. The need for qualified experts who can configure and update these systems increases the cost of maintenance, even if we were to assume that such expertise is widely available in the industry.

- SIEMs throw up a lot of false alerts when correlation rules are first put to use, which prompts some customers to disable these detection mechanisms altogether. SIEMs need to run advanced correlation algorithms with well-written, specific correlation rules to reduce false positives. The sophistication of these algorithms also determines the capability to detect zero-day threats, which hasn’t been the forte of SIEM solutions in the market.

In spite of these challenges, a market does exist for these traditional tools, as both SIEM and Log Management play an important role in addressing organizational governance and compliance requirements related to data retention for extended periods or incident reporting. So, these solutions are here to stay and will remain in demand, at least among firms in specific industry verticals and business functions. Also, SIEM vendors have been exploring adjacent product categories – the ones I will cover below – to tackle the needs of the ever-changing threat landscape.

Is Security Analytics a panacea for security woes?

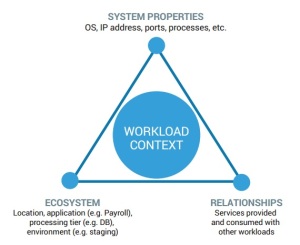

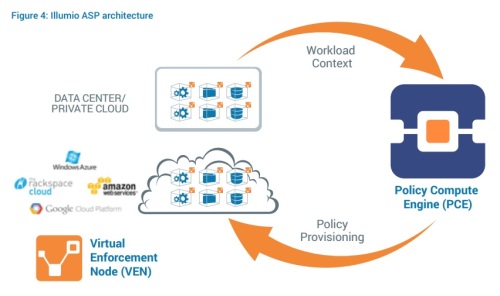

With the current threat landscape increasingly featuring Advanced Persistent Threats (APTs), security professionals need intelligence-driven tools that are highly automated to discover meaningful patterns and deliver the highest level of security. And there we have all the key ingredients that make up a Security Analytics solution!

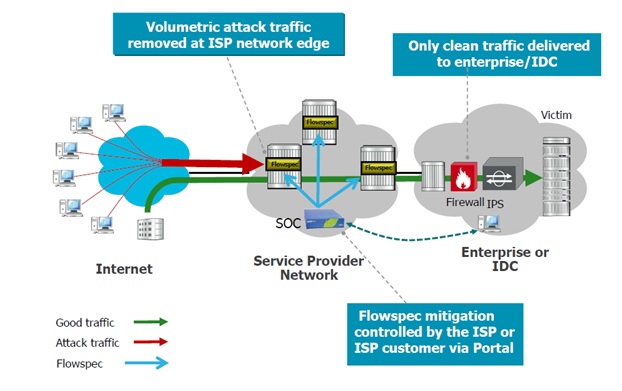

First off, Security Analytics (SA) is not a replacement for traditional security controls such as NGFW, IDS, IPS, AV, AMP, DLP or even SIEMs and other existing solutions, but it can make them work better by reconfiguring security controls based on SA outcomes. For all of this to come together, and for a deeper truth about potential threats and current intrusions to emerge through Security Analytics, threat intelligence needs to be fused from multiple internal and external sources. Indicators of Compromise (IOCs) at various levels need to be understood in order to capture previously neglected artifacts and correlate behavior to detect potential threats and zero-day attacks, as traditional signature-based approaches are no longer sufficient.

While use of Big Data technologies might be fundamental to any implementation of predictive analytics based on data science, it is not a sufficient condition to attain nirvana in the Security Analytics world. After wading through Big Data material, I’ve decided to keep it out of this discussion, as it doesn’t help define the essence of SA. One could as well scale up a SIEM or Log Management solution in a Big Data framework.

Now that you have a rough idea about the scope of this blog post, let me dive deep into the various SA technical approaches, introduce the concepts of Threat Intelligence, IOCs and related standardization efforts, and finally present a Security Analytics architectural framework that could tie all of these together.

How have vendors implemented Security Analytics?

With Gartner yet to acknowledge that a market exists for Security Analytics – its consultants seem to be content with Threat Intelligence Management Platforms (TIMP) – I chose to be guided by the list of ‘Companies Mentioned’ here by ResearchAndMarkets, and to additionally explore dominant security players (Palo Alto Networks, Fortinet) missing from this list, to check whether anything promising is cooking in this space. Here is what I found. Fewer than a handful of these vendors have ventured into predictive analytics and self-learning systems using advanced data science. Most others in the market offer enhanced SIEM and unified cyber forensic solutions – ones that can consume threat intelligence feeds and/or operate on L2-L7 captures – as a Security Analytics package, though that is certainly a step forward. The intent of this section is to offer a glimpse into technology developments in the Security Analytics space. I doubt that my research was exhaustive [I mostly found product pitches online that promise the world to undiscerning potential customers], and I would be glad to make the picture a little more complete if you could educate me on what is going on at your firm in this space, or on any game changer I’ve missed!

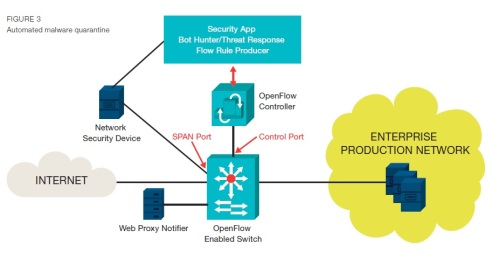

All SA products consume and manage data in some manner, be it log data, file data, full network packet captures and/or behavioral data from systems or networks, by integrating with other security devices and solutions in the deployment. However, there must be intelligence in the system that helps render that data into something useful. Vendors have built this intelligence into SA via deep inspection of L2-L7 exchanges, anomaly sensors to spot behavior deviations, the diamond model of intrusion analysis, game theory and/or advanced machine learning algorithms, potentially based on Campaigns, where a Campaign is a “collection of data, intelligence, incidents, events or attacks that all share some common criteria and can be used to track the attacker or attack tactic” [sic] [I’m going to wait for this concept to evolve and become commonplace before I remark on it]. I’ve used sample vendor offerings to elaborate on each of these SA implementation approaches. I’ve come across a categorization of SA offerings into data analyzers vs. behavior analyzers, but I view these as building blocks for SA rather than alternative solutions.

Deep data inspection – Solera Networks’ DeepSee platform (now acquired by Blue Coat) offers an advanced cyber forensics solution that reconstructs and analyzes L2-L7 data streams (captured by integrating with industry-standard and leading security vendor offerings for routers, switches, next-gen firewalls, IPSs, SIEMs et al.) for application classification and metadata extraction, indexed storage and analysis, providing accurate visibility into activities, applications and personas on the network. It is built on the premise that full visibility, context and content are critical to reacting to security incidents in real time or back in time. I scoured the net to understand how it detects a breach (beyond what a scaled-up SIEM can do with similar stream captures and batch data stores) and thus goes beyond cyber forensics, but didn’t find any material. Recon from CyberTap Security (acquired by IBM) boasts a similar solution, with the ability to reassemble captured network data into its original form, be it documents, web pages, pictures, mails, chat sessions, VoIP sessions or social media exchanges.

Behavioral anomaly sensors – With APT attacks consisting of multiple stages – intrusion, command-and-control communication, lateral movement, data exfiltration, covering tracks and persistence [the figure below has further details on the APT lifecycle] – each action by the attacker provides an opportunity to detect behavioral deviations from the norm. Correlating these seemingly independent events can reveal evidence of the intrusion, exposing stealthy attacks that could not be identified through other methods. These detectors of behavioral deviations are referred to as “anomaly sensors,” with each sensor examining one aspect of the host’s or user’s activities within an enterprise’s network. Interset and PFP Cybersecurity are among the few vendors who’ve built threat detection systems based on behavioral analytics, as reported here.

Figure – Lifecycle of Advanced Persistent Threats (Source: Sophos)
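To make the idea of anomaly sensors a little more concrete, here is a minimal sketch in which each sensor compares one observed metric for a host against that host's own baseline, and a host is flagged only when several sensors deviate together. The metric names, the sample numbers, the z-score test and the thresholds are assumptions chosen for illustration; commercial products use far richer models.

```python
from statistics import mean, stdev


def anomaly_score(baseline, observed):
    """Number of standard deviations `observed` lies from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


def is_anomalous(baseline, observed, threshold=3.0):
    """A single 'sensor': True when the deviation exceeds the threshold."""
    return anomaly_score(baseline, observed) > threshold


def correlate(sensor_readings, min_sensors=2):
    """Return the sensors that fired, but only if enough fired together."""
    fired = [
        name
        for name, (baseline, observed) in sensor_readings.items()
        if is_anomalous(baseline, observed)
    ]
    return fired if len(fired) >= min_sensors else []


# Hypothetical per-host history (one value per day) and today's observation.
baseline_mb = [100, 110, 90, 105, 95, 100, 100]
readings = {
    "outbound_mb": (baseline_mb, 400),
    "logins_per_day": ([3, 4, 3, 5, 4, 3, 4], 4),
    "distinct_peers": ([10, 12, 11, 9, 10, 11, 10], 60),
}
suspicious = correlate(readings)
```

In this sample the outbound volume and the number of distinct peers both jump far outside the host's history while login counts stay normal, so two independent sensors agree and the host is flagged. Requiring agreement between sensors is one simple way to trade sensitivity for fewer false positives.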

Cognitive Security (a research firm funded by the US Army, Navy and Air Force, now acquired by Cisco) stands out as it relies on advanced statistical modeling and machine learning to independently identify new threats, continuously learn from what it sees, and adapt over time. This is a good solution to dive deep into, to understand how far any vendor has ventured into Security Analytics. Its suite of solutions offers protection through Network Behavior Analysis (NBA) and a multi-stage Anomaly Detection (AD) methodology, implementing the Cooperative Adaptive Mechanism for Network Protection (CAMNEP) algorithm for trust modeling and reputation handling. It employs Game Theory principles to ensure that hackers cannot predict or manipulate the system’s outcome, and compares current data with historical assessments called trust models to maintain a highly sensitive detection engine with a low false positive rate. The platform utilizes standard NetFlow/IPFIX data and is said to deploy algorithms such as MINDS, Xu et al., Volume prediction, Entropy prediction and TAPS. It does not require supplementary information such as application data or user content, and so ensures user data privacy and data protection throughout the security monitoring process. To reiterate, this is a passive, self-monitoring and self-adapting system that complements existing security infrastructure. Cylance, among SINET’s Top 16 emerging cybersecurity companies of 2014 as reported here, also seems to have made some headway with this artificial-intelligence-based SA approach.
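As a rough intuition for why entropy is a useful signal on flow data alone, the sketch below computes the Shannon entropy of destination ports seen in a batch of flow records. It is not the CAMNEP algorithm or any vendor's implementation; the port lists are made-up samples and the function name is hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Shannon entropy (in bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = len(values)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical destination ports taken from flow records for one source host.
normal_ports = [443, 443, 80, 443, 80, 443, 53, 443]  # a few popular services
scan_ports = list(range(1000, 1008))                  # every flow hits a new port

normal_entropy = shannon_entropy(normal_ports)
scan_entropy = shannon_entropy(scan_ports)
```

Ordinary traffic concentrates on a handful of ports and so has low entropy, whereas a port scan spreads evenly across many ports and pushes entropy towards its maximum. A detector can then compare the observed value with what the host's history predicts, without ever looking at payload content.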

Zipping past A-to-Z of Threat Intelligence

Definition – Here is how Gartner defines Cyber Threat Intelligence (CTI) or Threat Intelligence (TI) – “Evidence-based knowledge, including context, mechanisms, indicators, implications and actionable advice about an existing or emerging menace or hazard to assets that can be used to inform decisions regarding the subject’s response to that menace or hazard.”

How does TI help? – Clearly, TI is not just indicators, and it can be gathered by human analysts or automated systems, and from internal or external sources. Such TI could help identify misuse of corporate assets (say, as part of a botnet), detect data exfiltration and prevent further leakage of sensitive information, spot compromised systems that communicate with C2 (i.e. Command-and-Control, aka CnC) servers hosted by malicious actors, detect targeted and persistent threats missed by other defenses and ultimately initiate remediation steps, or, most optimistically, stop the attacker in his tracks.

How to gather TI? – A reliable source of TI is one’s own network – information from security tools such as firewalls, IPS et al, network monitoring and forensics tools, malware analysis through sandboxing and other detailed manual investigations of actual attacks. Additionally, it can be gleaned through server and client honeypots, spam and phishing email traps, monitoring hacker forums and social networks, Tor usage monitoring, crawling for malware and exploit code, open collaboration with research communities and within the industry for historical information and prediction based on known vulnerabilities.

External TI sources can be (a) open source or commercial, and (b) service providers or feed providers. These providers also differ in how they glean raw security data or threat intelligence, as categorized below:

- Those who have a large installed base of security or networking tools and can collect data directly from customers, anonymize it, and deliver it as threat intelligence based on real attack data. E.g. Blue Coat, McAfee Threat Intelligence, Symantec Deepsight, Dell SecureWorks, Palo Alto Wildfire, AlienVault OTX

- Those who rely heavily on network monitoring to understand attack data. These providers have access to monitoring tools that sit directly on the largest internet backbones or in the busiest data centers, and so they are able to see a wide range of attack data as it flows from source to destination. E.g. Verisign iDefense, Norse IPViking/Darklist, Verizon

- A few intelligence providers focus on the adversary, tracking what different attack groups are doing and closely monitoring their campaigns and infrastructure. This type of intelligence can be invaluable because adversary-focused intelligence can be proactive: knowing that a group is about to launch an attack allows customers to prepare before the attack is launched. TI service providers of this kind, who focus on manual intelligence gathering by employing human security experts, include iSIGHT Partners, FireEye Mandiant and CrowdStrike.

- Open source intelligence providers, who typically crowdsource. The best open source TI providers typically focus on a given threat type or malware family. E.g. Abuse.ch, which tracks C2 servers for Zeus, SpyEye and Palevo malware and combines domain name blocklists. Other well-regarded open sources of TI are Blocklist.de, Emerging Threats and Spamhaus. ThreatStream OPTIC intelligence is community vetted but not open source.

- Academic/research communities such as Information Sharing and Analysis Centers (ISACs), the Research and Education Networking (REN) ISAC, and the Defense Industrial Base Collaborative Information Sharing Environment (DCISE)

Other TI sources, via manual or cloud feeds, include malware data from VirusTotal, Malwr.com, VirusShare.com and ThreatExpert; the National Vulnerability Database; Tor, which provides a list of Tor node IP addresses; and others such as OSINT, SANS, CVEs, CWEs, OSVDB and OpenDNS. A few other commercial vendors include Vorstack, CyberUnited, Team Cymru and Recorded Future.

Indicators of Compromise – The Basics

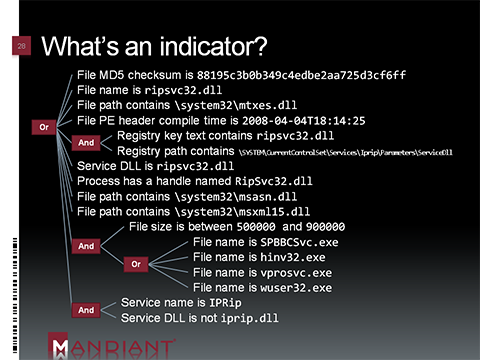

Indicators of Compromise (IOCs) denote any forensic artifact or remnant of an intrusion that can be identified on a host or network, with the term ‘artifact’ in the definition allowing for observational error. Indicators could take the form of IP addresses of C2 servers, domain names, URLs, registry settings, email addresses, HTTP user agents, file mutexes, file hashes, compile times, file sizes, names, path locations etc. Different types of indicators can be combined in one IOC [as illustrated in the figure below].

Figure – Indicators of Compromise aka IOC (Source: Mandiant.com)
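The way different indicator types combine into one IOC can be pictured as a small tree of AND/OR nodes over individual indicator terms. The sketch below evaluates such a tree against what was observed on a host. The dictionary layout, the indicator type names and all of the values (file name, domain, hash) are placeholders invented for this example, not a real IOC or any standard's schema.

```python
def matches(ioc, observed):
    """Recursively evaluate a nested AND/OR indicator tree against observations."""
    op = ioc.get("op")
    if op == "AND":
        return all(matches(child, observed) for child in ioc["children"])
    if op == "OR":
        return any(matches(child, observed) for child in ioc["children"])
    # Leaf node: a single indicator term such as a file hash or a C2 domain.
    return ioc["value"] in observed.get(ioc["type"], set())


# Hypothetical IOC: either a known file hash, or a suspicious file name
# seen together with traffic to a known C2 domain.
ioc = {
    "op": "OR",
    "children": [
        {"type": "file_md5", "value": "d41d8cd98f00b204e9800998ecf8427e"},
        {
            "op": "AND",
            "children": [
                {"type": "file_name", "value": "svch0st.exe"},
                {"type": "domain", "value": "c2.example.com"},
            ],
        },
    ],
}

# Observations collected per host, keyed by indicator type.
host_a = {"file_name": {"svch0st.exe"}, "domain": {"c2.example.com", "example.org"}}
host_b = {"file_name": {"svch0st.exe"}}

host_a_hit = matches(ioc, host_a)
host_b_hit = matches(ioc, host_b)
```

Host A matches because both conditions of the AND branch are present, while host B has only the file name and does not match. Combining weak indicators this way is what lets an IOC stay specific even when no single term is conclusive on its own.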

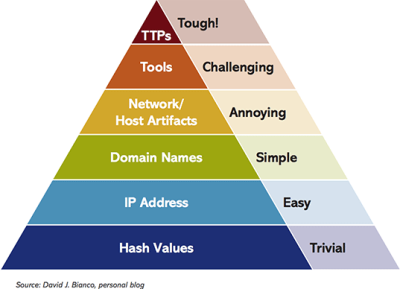

The pyramid below stacks up the various indicators one can use to detect an adversary’s activities, and shows how much effort it would take for the adversary to pivot and continue with the planned attack when indicators at each of these levels are denied.

Figure – Pyramid of Pain with IOCs (Image Source: AlienVault)

Starting at the base of the pyramid, where the adversary’s pain is lowest if detected and denied, we have Hash Values such as SHA1 or MD5, which are often used to uniquely identify specific malware samples or malicious files involved in an intrusion. The adversary could change a single insignificant bit and cause a different hash to be generated, thus making our previously detected hash IOC ineffective, unless we move to fuzzy hashes.
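A quick way to see why hash indicators are so brittle is to flip one bit of a file and hash it again. The sketch below does this with Python's standard `hashlib`; the payload bytes are a harmless placeholder standing in for a malicious file.

```python
import hashlib

# Placeholder bytes standing in for a malicious file.
original = b"malicious payload (placeholder bytes)"

# Flip the lowest bit of the last byte; the file length stays the same.
modified = original[:-1] + bytes([original[-1] ^ 0x01])

md5_original = hashlib.md5(original).hexdigest()
md5_modified = hashlib.md5(modified).hexdigest()
sha1_original = hashlib.sha1(original).hexdigest()
sha1_modified = hashlib.sha1(modified).hexdigest()

# Count how many of the 128 MD5 output bits differ between the two digests.
differing_bits = bin(int(md5_original, 16) ^ int(md5_modified, 16)).count("1")

print(md5_original, md5_modified, differing_bits)
```

A one-bit change in the input yields a completely unrelated digest, so a blocklist keyed on the original hash no longer matches the modified file. Fuzzy hashing schemes are designed so that similar inputs yield similar digests, which is why they sit higher up the pyramid.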

Next up in the pyramid are IP addresses. These again might not take long for adversaries to recover from, as they can change the IP address with little effort. If they were to use an anonymous proxy service like Tor, then this indicator has no effect on the adversary. In comparison, Domain Names are slightly harder to change than IP addresses as they must be registered and visible on the Internet, but changing them is still doable, though it might take an adversary a day or two.

Looking at it from an IOC usage perspective in security deployments, the TTL (time to live, i.e. how long an indicator remains useful) of an IP address can be very low. Compromised hosts in legitimate networks could get patched, illicitly acquired hosting space might be turned off, malicious hosts are quickly identified and blocked, or the traffic might be black holed by the ISP. An IP address may have a TTL of 2 weeks, while domains and file hashes would have significantly longer TTLs.
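In practice this means an indicator store has to age entries out at different rates depending on their type. The sketch below shows one simple way to do that. The two-week figure for IP addresses comes from the paragraph above, while the 90-day and 365-day values for domains and file hashes are assumptions picked only to illustrate "significantly longer"; the indicator values themselves are placeholders.

```python
from datetime import datetime, timedelta

# Retention period per indicator type. Only the IP value reflects the text;
# the domain and file-hash values are illustrative assumptions.
TTL_BY_TYPE = {
    "ip": timedelta(days=14),
    "domain": timedelta(days=90),
    "file_hash": timedelta(days=365),
}


def active_indicators(indicators, now):
    """Keep only indicators whose age is still within the TTL for their type."""
    return [
        ind
        for ind in indicators
        if now - ind["first_seen"] <= TTL_BY_TYPE[ind["type"]]
    ]


now = datetime(2015, 3, 1)
indicators = [
    {"type": "ip", "value": "203.0.113.7", "first_seen": datetime(2015, 2, 1)},
    {"type": "domain", "value": "c2.example.com", "first_seen": datetime(2015, 2, 1)},
    {"type": "file_hash", "value": "placeholder-hash", "first_seen": datetime(2014, 6, 1)},
]
still_active = [ind["value"] for ind in active_indicators(indicators, now)]
```

The IP address, first seen 28 days earlier, is dropped, while the domain of the same age and the much older file hash are kept. Expiring stale IP indicators also helps avoid blocking an address that has since been cleaned up or reassigned.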

Typical examples of Network Artifacts are URI patterns, C2 information embedded in network protocols, and distinctive HTTP User-Agent or SMTP Mailer values. Host Artifacts could be registry keys or values known to be created by specific pieces of malware, files or directories dropped in certain places or using certain names, names or descriptions of malicious services, or almost anything else that is distinctive. Detecting an attack using network/host artifacts can have some negative impact on the adversary, as it requires them to expend effort to identify which artifact has revealed their approach, fix it and relaunch.

Further up in the pyramid, we have Tools, which would include utilities designed, say, to create malicious documents for spearphishing, backdoors used to establish C2 communication, or password crackers and other host-based utilities that adversaries might want to use after a successful intrusion. Some examples of tool indicators include AV or YARA signatures, network-aware tools with a distinctive communication protocol, and fuzzy hashes. If the tool used by adversaries has been detected and the hole has been plugged, they have to find or create a new tool for the same purpose, which breaks their stride.

When we detect and respond to Tactics, Techniques and Procedures (TTPs), we operate at the level of the adversary’s behavior and tendencies. By denying them any TTP, we force them to do the most time-consuming thing possible – learn new behaviors. To quote a couple of examples, spearphishing with a trojaned PDF file or with a link to a malicious .SCR file disguised as a ZIP, and dumping cached authentication credentials and reusing them in Pass-the-Hash attacks, are TTPs.

There are a variety of ways of representing indicators – e.g. YARA signatures are usually used for identifying malicious executables, and Snort rules are used for identifying suspicious patterns in network traffic. These formats usually specify not only ways to describe basic notions but also logical combinations using boolean operators. YARA is an open source tool used to create free-form signatures that can tie indicators to actors, and it allows security analysts to go beyond the simple indicators of IP addresses, domains and file hashes. YARA also helps identify commands generated by the C2 infrastructure.

Sharing IOCs across organizational boundaries will provide access to actionable security information that is often peer group or industry relevant, support an intelligence driven security model in organizations, and force threat actors to change infrastructure more frequently and potentially slow them down.

Exchanging Threat Intelligence – Standards & Tools

Effective use of CTI is crucial to defend against malicious actors and thus important to ensure an organization’s security. To gain real value from this intelligence, it has to be delivered and used fairly quickly if not in real-time, as it has a finite shelf life with threat actors migrating to new attack resources and methods on an ongoing basis. In the last couple of years, there has been increased effort to enable CTI management and sharing within trusted communities, through standards for encoding and transporting CTI.

Generally, indicator based intelligence includes IP addresses, domains, URLs and file hashes. These are delivered as individual black lists or aggregated reports via emails or online portals, which are then manually examined and fed by analysts into the recipient organization’s security infrastructure. In certain cases, scripts are written to bring in data from VirusTotal and other OSINT platforms directly into heuristic network monitors such as Bro.

Let me touch upon various CTI sharing standards – OpenIOC, Mitre package (CybOX, STIX, TAXII), MILE package (IODEF, IODEF-SCI, RID) and VERIS – that are aimed at doing away with the above TI sharing inefficiencies.

OpenIOC is an open source, extensible and machine-digestible format for storing IOC definitions in XML and sharing threat information within or across organizations. This standard provides the richest set of technical terms (over 500) for defining indicators and allows for nested logical structures, but is focused on tactical CTI. The standard was introduced by Mandiant and is primarily used in its products, but can be extended by other organizations by creating and hosting an Indicator Term Document. There has been limited commercial adoption outside of Mandiant, with McAfee among the few vendors whose products can consume OpenIOC files. Mandiant IOC Editor, Mandiant IOC Finder and Redline are tools that can be used to work with OpenIOC.

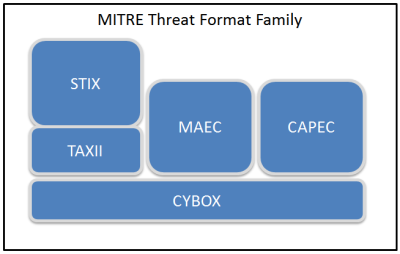

Mitre has developed three standards that are designed to work together and enable CTI sharing – Cyber Observable eXpression (CybOX), Structured Threat Information Expression (STIX) and Trusted Automated eXchange of Indicator Information (TAXII). With STIX being accepted by industry leaders, STIX and TAXII are starting to see wide adoption. Common Attack Pattern Enumeration and Classification (CAPEC) and Malware Attribute Enumeration and Characterization (MAEC) are focused on attack patterns and malware analysis respectively.

Figure – Threat Intelligence Formats in Mitre Family (Source: Bit9.com)

- CybOX provides the ability to automate sharing of security intelligence by defining 70 objects (e.g. file, mutex, HTTP session, network flow) that can be used to define measurable events or stateful properties (e.g. file hashes, IPs, HTTP GET, registry keys). Objects defined in CybOX can be used in higher level schemas like STIX. While OpenIOC can effectively represent only CybOX objects, CybOX also understands the notion of events which enables it to specify event order or elapsed time, and bring in the notion of behaviors.

- STIX was designed to additionally provide context for the threat being defined through observable patterns, and thus covers the full range of cyber threat information that can be shared. It uses XML to define threat-related constructs such as campaign, exploit target, incident, indicator, threat actor and TTP. In addition, extensions have been defined for other standards such as TLP, OpenIOC, Snort and YARA. The structured nature of the STIX architecture allows it to define relationships between constructs, e.g. the TTP used can be related to a specific threat actor.

- TAXII provides a transport mechanism to exchange CTI in a secure and automated manner, through its support for confidentiality, integrity and attribution. It uses XML and HTTP for message content and transport, and allows for custom formats and protocols. It supports multiple sharing models including variations of hub-and-spoke or peer-to-peer, and push or pull methods for CTI transfer.

Managed Incident Lightweight Exchange (MILE), an IETF group, works on the data format to define indicators and incidents, and on standards for exchanging data. This group has defined a package of standards for CTI which includes Incident Object Description and Exchange Format (IODEF), IODEF for Structured Cyber Security Information (IODEF-SCI), and Real-time Inter-network Defense (RID) which is used for communicating CTI over HTTP/TLS. IODEF is an XML based standard used to share incident information by Computer Security Incident Response Teams (CSIRTs) and has seen some commercial adoption e.g. from HP ArcSight. IODEF-SCI is an extension to the IODEF standard that adds support for attack pattern, platform information, vulnerability, weakness, countermeasure instruction, computer event log, and severity.

The Vocabulary for Event Recording and Incident Sharing (VERIS) framework from Verizon has been designed for sharing strategic information and an aggregate view of incidents, but is not considered a good fit for sharing tactical data.

Many vendors and open source communities have launched platforms to share TI, e.g. AlienVault’s Open Threat Exchange (OTX), the Collective Intelligence Framework (CIF) and IID’s ActiveTrust. OTX is a publicly available service for sharing TI gleaned from OSSIM and AlienVault deployments. CIF is a client/server system for sharing TI, which is internally stored in IODEF format, and provides feeds or allows searches via CLI and RESTful APIs. CIF is capable of exporting CTI for specific security tools. The IID ActiveTrust platform is leveraged by government agencies and enterprises to confidently exchange TI and coordinate responses between organizations.

Unifying Security Intelligence & Analytics – The OpenSOC framework

So, how do organizations use the Threat Intelligence that I’ve talked about at length? With Threat Intelligence coming in from a variety of sources and in multiple formats (even if each of these is standardized), a new solution being floated in the market is the Threat Intelligence Management Platform (TIMP) or Threat Management Platform (TMP), which is tasked with parsing incoming intelligence and translating it into formats understood by various security solutions (e.g. malware IPs into NIDS signatures, email subjects into DLP rules, file hashes into ETDR/EPP/AV rules, Snort rules for IPS, block lists and watch lists for SIEMs/AS, signatures for AV/AM etc.), to make it suitable for dissemination. TI can also be uploaded into a SIEM for monitoring, correlation and alerting, or to augment any analysis with additional context data.
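As an illustration of the translation step such a platform performs, the sketch below turns malicious IP indicators into Snort-style alert rules. The indicator dictionary layout and both function names are hypothetical, and the generated rule is deliberately minimal. It assumes `$HOME_NET` is defined in the sensor's configuration and uses SIDs from 1000001 upwards, the range conventionally used for locally written rules.

```python
import ipaddress


def ip_to_snort_rule(ip, sid):
    """Build a minimal Snort alert rule for traffic to a known-bad IP."""
    ipaddress.ip_address(ip)  # raises ValueError if the feed entry is malformed
    return (
        f"alert ip $HOME_NET any -> {ip} any "
        f'(msg:"Traffic to known malicious IP"; sid:{sid}; rev:1;)'
    )


def indicators_to_rules(indicators, first_sid=1000001):
    """Translate the IP indicators in a feed into rules; skip other types."""
    rules = []
    sid = first_sid
    for indicator in indicators:
        if indicator["type"] != "ip":
            continue  # domains, hashes etc. would go to other tools
        rules.append(ip_to_snort_rule(indicator["value"], sid))
        sid += 1
    return rules


# Hypothetical feed entries using documentation-range addresses.
feed = [
    {"type": "ip", "value": "203.0.113.7"},
    {"type": "domain", "value": "c2.example.com"},
    {"type": "ip", "value": "198.51.100.20"},
]
rules = indicators_to_rules(feed)
```

The same feed would be rendered differently for each consumer, e.g. the domain entry might become a DNS blocklist line, which is precisely the per-tool fan-out a TIMP is meant to automate.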

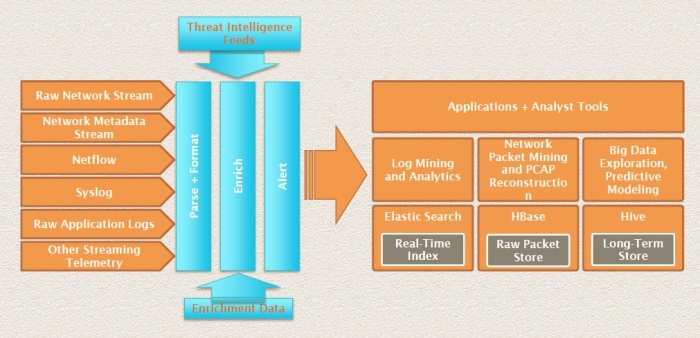

Now that I’ve tied up one dangling thread, what about Security Analytics? Having zoomed in early on in this blog post on what an SA solution operates on and how its output could improve security controls in a deployment, I’ll provide a 30,000-40,000ft view (at cruising altitude?) this time around, by introducing OpenSOC, a unified data-driven security platform that combines data ingestion, storage and analytics.

Figure – OpenSOC framework

The OpenSOC (Open Security Operations Center) framework provides the building blocks for Security Analytics to (1) capture, store, normalize and link various internal security data in real-time for forensics and remediation, (2) enrich, relate, validate and contextualize earlier processed data with threat intelligence and geolocation to create situational awareness and discover new threats in a timely manner, and (3) provide contextual real-time alerts, advanced search capabilities and full packet extraction tools, for a security engine that implements Predictive Modeling and Interactive Analytics.
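The three building blocks above (normalize, enrich, alert) can be pictured with a toy pipeline like the one below. It does not use OpenSOC's actual components or APIs; the log line format, the lookup tables and every function name are assumptions made purely to show how the stages hand data to each other.

```python
def normalize(raw_line):
    """Parse a hypothetical 'ts src_ip dst_ip bytes' log line into a dict."""
    ts, src_ip, dst_ip, nbytes = raw_line.split()
    return {"ts": int(ts), "src_ip": src_ip, "dst_ip": dst_ip, "bytes": int(nbytes)}


def enrich(event, threat_intel, geo_db):
    """Attach geolocation and threat-intelligence context to an event."""
    enriched = dict(event)
    enriched["dst_country"] = geo_db.get(event["dst_ip"], "unknown")
    enriched["threat"] = threat_intel.get(event["dst_ip"])  # None if not listed
    return enriched


def alert_on(events):
    """Emit only the events that carry threat-intelligence context."""
    return [event for event in events if event["threat"] is not None]


# Hypothetical lookup tables standing in for TI feeds and a geolocation database.
threat_intel = {"203.0.113.7": "known C2 server"}
geo_db = {"203.0.113.7": "ZZ", "198.51.100.20": "ZZ"}

raw_logs = [
    "1425168000 10.0.0.5 198.51.100.20 512",
    "1425168060 10.0.0.5 203.0.113.7 20480",
]
enriched_events = [enrich(normalize(line), threat_intel, geo_db) for line in raw_logs]
alerts = alert_on(enriched_events)
```

Only the second event raises an alert, and it arrives already carrying the context an analyst needs (which internal host, what kind of threat, where the destination is). In a real deployment each stage would be a distributed streaming component rather than a function call.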

The key to increasing the ability to detect, respond to and contain targeted attacks is a workflow and set of tools that allow threat information to be communicated across the enterprise at machine speed. With OpenSOC being an open source solution, any organization can customize the sources and amount of security telemetry to be ingested from within or outside the enterprise, and also add incident detection tools to suit its tailored Incident Management and Response workflow.

I’ve trodden on uncertain ground in navigating the product market for Security Analytics, given that it is still nascent and the product category isn’t well delineated. I would welcome any views on this post, be they validating or contradicting mine.